We tend to think of the web as a tool that people use to access information and communicate, but in reality the bulk of internet traffic is machines talking to machines.

Don’t we all think of the web as the place where we can get the information we need with only one or two clicks? But what is internet traffic if not machines communicating with other machines? A software application that runs tasks over the web at a rate that would be impossible for a person to match is known as a bot. Website owners rarely believe that their website can become a target for bots until it happens and it is too late to take any action. Finding a good backup to restore your website can be painful, as many website backups cannot be restored. This usually has to do with database backups not working properly. Worse still, if you do not restore your website within a reasonable time, Google can downgrade your ranking and you will begin to lose readers and customers.

That’s why you have to take all the necessary precautions that will help you defend your WordPress website against bots. And when it comes to advice on all things WordPress-related, who better to show you the way than our very own WordPress experts?

Bots, short for robots, are computer programs that surf websites all over the web and perform specific tasks. Like everything else on the web, there are good bots and bad bots. In this article we will explain what bots are and how to block bad bots.

The Crucial Difference Between Good And Bad Bots

It’s worth noting that not all bots are classified as ‘bad’. Some have no bad intentions and serve purposes such as indexing pages.

Bot is the catchall name for software that “pretends” to be human online. Good bots are used by search engines like Google and Yahoo to crawl your website, learn about it, and use that information so your website can rank in the search engine results. Probably the best-known ‘good’ bot is Googlebot. Web crawlers like Google’s are considered good bots: they follow the rules and are useful. For those of you who are not entirely familiar with Googlebot, it is essentially a crawler that indexes your pages based on the meta tags you use. And indexing means adding pages to Google Search, so we have that covered.

Bad bots do not follow the rules and have no good effects on your website. They are often used to harvest email addresses from websites, which are later used by spammers. Bad bots are also used to find security vulnerabilities in websites. Once vulnerabilities are found by these bad bots, they are later exploited by hackers. Instead of being useful, bad bots exploit online resources on behalf of malicious actors, usually criminals.

It is not possible to block every bad bot; however, you can block or confuse the worst.

Why do you need to protect your WordPress site?

There are different ways your website can get attacked; the most common are:

• Brute force attacks on your login page – Bots try different logins and passwords to access your website, and the many login attempts consume plenty of resources. Most of these bots are unsophisticated, and moving the login page is enough to confuse them.

• Comment spam – As the name says, bots try to post spam comments, even on blog posts where commenting is disabled!

• Sniffing for unsafe themes and plugins – A bot attempts to access theme and plugin files on your website, looking for ones that are known to be unsafe.

• Indexing your website – A bot crawls all of your pages just like Googlebot. This type of bot is usually operated by companies to gather your data/content for statistics, link profiles, etc.

• Server memory – Each web server has limited memory that is shared by all of the sites running on it. Bad bots can use up that memory within a couple of minutes.

All these attacks often generate far more load on your web server than your regular visitors do. The problem with WordPress, or rather with the PHP code that is executed for each page view, is that it uses up memory (RAM). Before you know it, your website is down, like every other site on the server. The solution is to limit the number of requests to PHP files and to keep unwanted bots off your website.

Let us put it this way: there is no shortage of ways your website can be hacked, but there is a limited number of ways to protect yourself. However, we will get to it later. Now, let’s talk

Of course, if you want your website to operate 24/7, the best thing would be to ask for help. Here are the things you can do to protect your WordPress website against bots.

5 Ways To Protect Your Website

We hope that, by now, you have grasped just how serious this is. Now that this subject has your attention, you can work on the five things that will help you keep ‘bad’ bots away.

1. Block dangerous bots

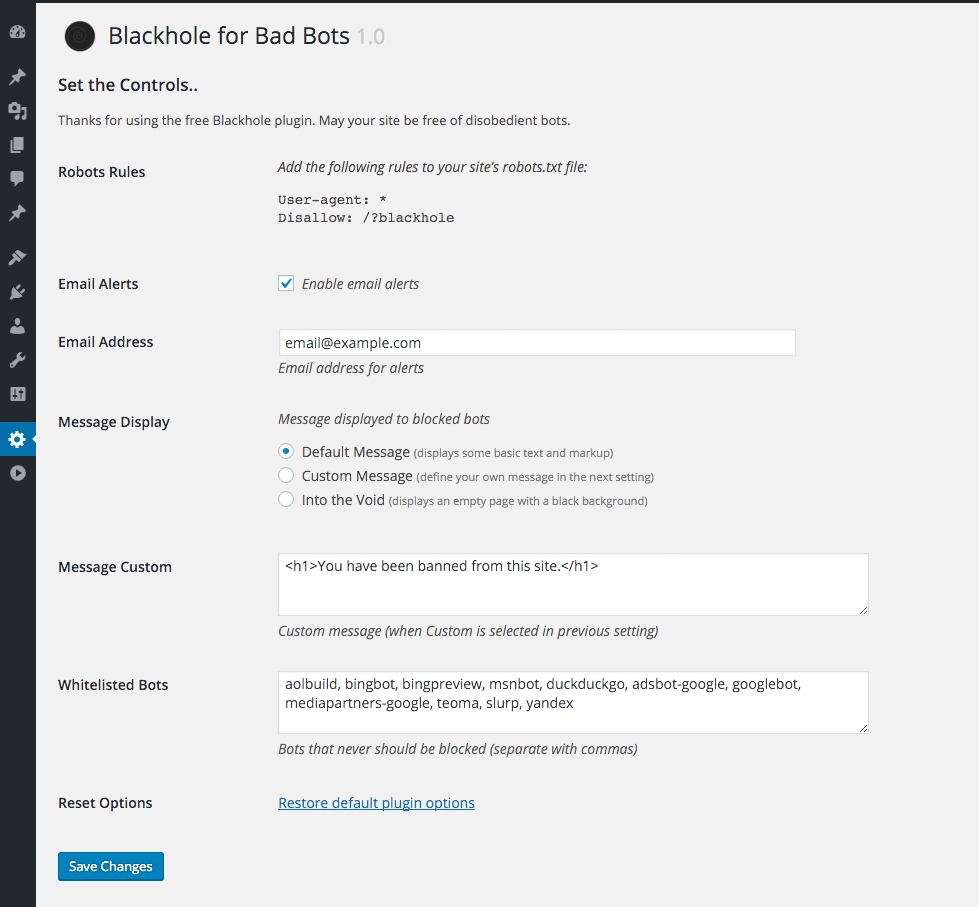

Bad bots can be blocked with the help of a robots.txt file. This file consists of rules that every bot is supposed to obey, and one good thing you can do is tell bots which pages on your WordPress website are off limits. If a bot disobeys a rule and visits a page that is excluded by the robots.txt file, it is clear that it is a bot with bad intentions. That is when it can be blocked by Blackhole for Bad Bots, a plugin with a clear and specific purpose. The plugin adds hidden links to pages on your website that can only be seen by bots, not humans. Once you add a rule to your robots.txt file that excludes the path of those hidden links, any bot that follows them anyway is easily spotted.

Another way to keep bad bots and spam bots off your website is to use the WordPress plugin AVH First Defense Against Spam. This plugin is not only effective at fighting spam, it is also a good way to block bad bots in general before they can “touch” your website.

2. Install WP Super Cache and use mod_rewrite for file caching

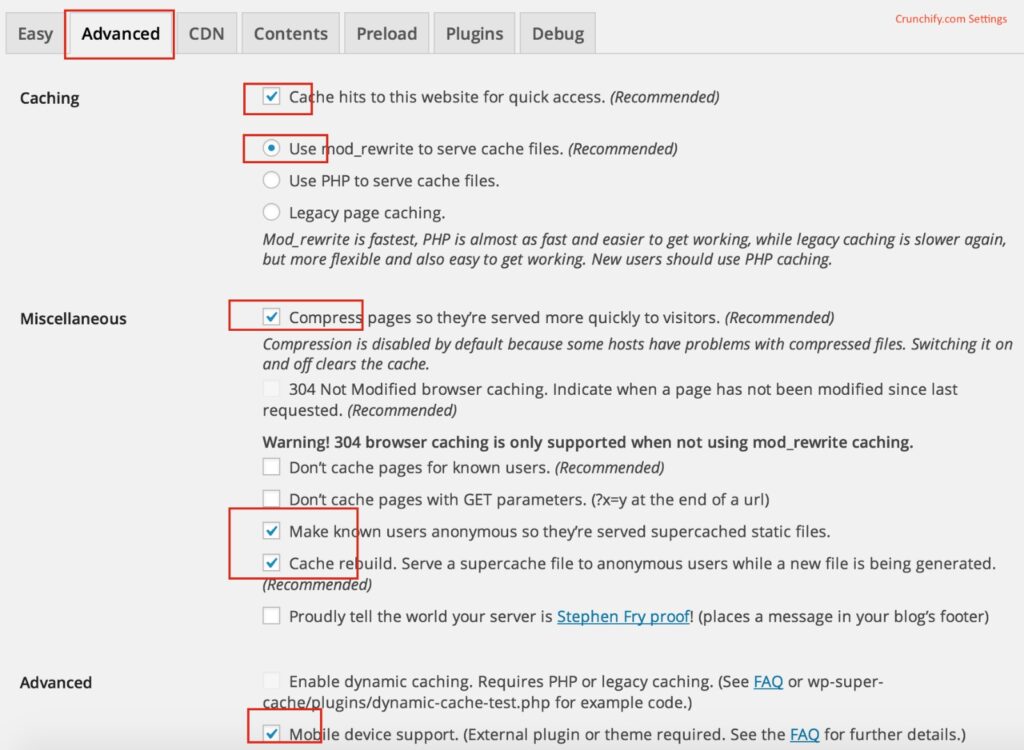

The easiest way to limit the execution of PHP scripts is to use a cache plugin. A cache plugin creates a copy of every requested page or post and serves the cached file rather than rebuilding the page all over again. WP Super Cache can be used for all WordPress websites because it is a well-maintained WordPress plugin that is very easy to install. On the WP Super Cache settings page, check the Advanced tab for the three caching options: mod_rewrite, PHP, and legacy caching. Do not use the PHP option, because some PHP code is involved whenever a cached file is created or requested. The mod_rewrite option is much better because, once the cache file has been created, no PHP code is needed to serve the cached page or post to the client or browser.

3. Protect Your Website Against Brute Force Attacks (Login)

The login page is also a PHP script that needs memory to execute. While many CAPTCHA or JavaScript-based plugins still give bots access to the PHP login script, BruteProtect can turn a bot away before most of the PHP code in WordPress is executed.

• Hide the wp-login.php page and wp-admin directory

Another effective option is the WP Cerber plugin. With this plugin it is possible to change the WordPress login URL to anything you like. Besides this feature, it is also possible to “hide” the wp-admin directory. The plugin works in multiple ways: the standard login URL no longer works, and an IP address is blocked after a number of hacking attempts. WP Cerber is a good plugin for most websites with a group of users.
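If you prefer not to rely on a plugin, a rough server-level alternative is to restrict who can reach the login script at all. The fragment below is only a sketch of that different technique, not something WP Cerber does: it assumes Apache 2.4 (where the Require directive is available in .htaccess) and that you log in from a fixed address. The IP shown is a placeholder from the documentation range and must be replaced with your own.

```apache
# Sketch only: allow wp-login.php from a single trusted address.
# Assumes Apache 2.4; 203.0.113.10 is a placeholder, not a real address.
<Files "wp-login.php">
    Require ip 203.0.113.10
</Files>
```

Every other visitor, human or bot, receives a 403 response from Apache for the login page without WordPress being loaded. This is not suitable if your users log in from changing addresses.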

4. .htaccess File to Block Bad Bots

You can block a single bad bot from accessing your WordPress site by using an .htaccess file. The .htaccess example below blocks a bot whose user-agent string starts with evil, such as evilbot.

RewriteEngine on

RewriteCond %{HTTP_USER_AGENT} ^evil

RewriteRule ^(.*)$ http://no.access/

The .htaccess sample checks the user-agent of the bad bot, and if it starts with evil, the request is redirected to a non-existent website, http://no.access/. If you would like to block multiple bad bots from accessing your WordPress site, use the [OR] flag in the .htaccess file and add a line for every bad bot you want to block, as shown in the example below.

RewriteEngine on

RewriteCond %{HTTP_USER_AGENT} ^evilbot [OR]

RewriteCond %{HTTP_USER_AGENT} ^spambot [OR]

RewriteCond %{HTTP_USER_AGENT} ^virusbot

RewriteRule ^(.*)$ http://no.access/
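A possible variation on the rules above, offered as a sketch rather than a drop-in replacement: instead of redirecting to a non-existent host, mod_rewrite can answer with 403 Forbidden directly using the [F] flag. The three bot names are the same illustrative ones used above. Note that this version matches the names anywhere in the user-agent string and ignores case, which is broader than the ^-anchored patterns in the original example.

```apache
<IfModule mod_rewrite.c>
RewriteEngine On
# Match any of the example bot names anywhere in the User-Agent header.
# [NC] makes the comparison case-insensitive.
RewriteCond %{HTTP_USER_AGENT} (evilbot|spambot|virusbot) [NC]
# "-" means no substitution; [F] returns 403 Forbidden and stops rewriting.
RewriteRule ^ - [F]
</IfModule>
```

Combining the names in one pattern avoids a chain of [OR] conditions, and the 403 response ends the request on your own server instead of sending the bot to another hostname.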

Bad bots are like pests; it is not possible to eliminate them all. You can maintain the most extensive list of bad bots, but new ones appear daily. The goal of this article is to help you effectively block the bad bots that are seriously affecting your WordPress website, not to block all the bad bots on the web.

5. Show a simple 404 – NOT FOUND error using .htaccess

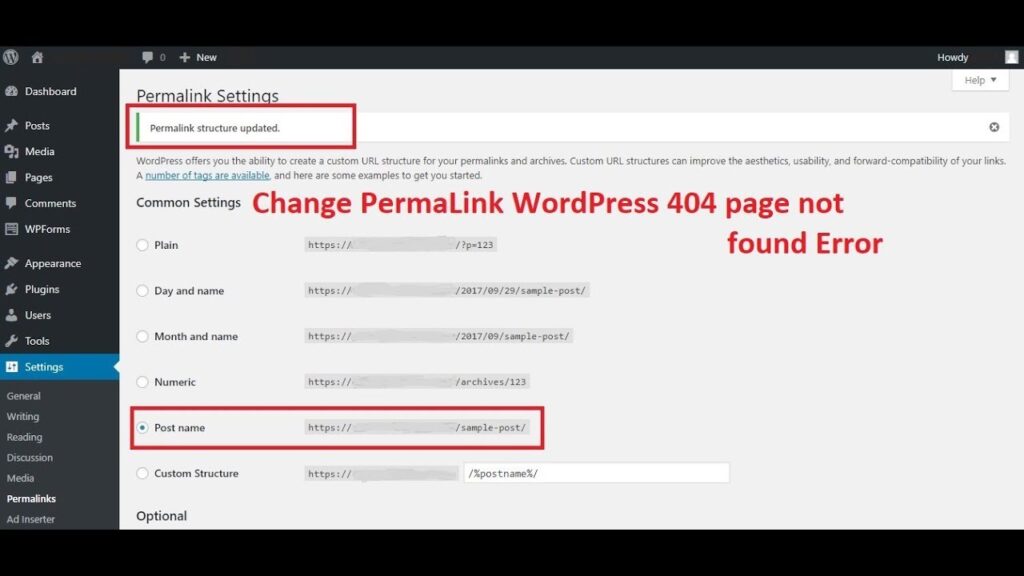

The last protection for your WordPress website is necessary because of a general mod_rewrite rule that exists in (almost) every WordPress installation. It comes down to these two mod_rewrite conditions in your .htaccess file, which are created by WordPress if you set up SEO-friendly permalinks.

# condition to check if a file doesn't exist

RewriteCond %{REQUEST_FILENAME} !-f

# condition to check if a directory doesn't exist

RewriteCond %{REQUEST_FILENAME} !-d

These mod_rewrite conditions do not check whether a requested file belongs to a post or page. If a bad bot is sniffing around your website for certain files (PHP, CSS, txt, js, png…) and the file does not exist, WordPress generates a 404 page. This page has no file cache, so all the database queries and PHP code run on every request. Imagine how much memory is used if a bot tries to access one hundred missing files in a single minute! The following rules can be used in your .htaccess file (paste them above the default code from WordPress) to stop WordPress from generating unwanted 404 pages:

<IfModule mod_rewrite.c>

RewriteEngine On

RewriteBase /

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_URI} \.(jpg|jpeg|png|gif|bmp|ico|css|js|swf|htm|html|txt|php)$ [NC]

RewriteRule .* - [R=404]

</IfModule>

It is possible that these mod_rewrite conditions and rules do not work 100% in your specific case. It is better to try these critical modifications on a test site first. Check your log files often and take action if you think some activity on your website is not normal. You might be surprised how many bots threaten your website daily. Website speed is crucial these days, and it is important to serve your pages quickly to your visitors.